August 8, 2024 · 7 min read

Forecasted Metrics: Benchmark

We have conducted a comprehensive comparison of our recently introduced feature Forecasted Metrics for time series forecasting with several publicly available tools and solutions in this area. Among the noteworthy entities in this space is NIXTLA, an open-source company specializing in time series research and deployment, recognized for their exceptional efforts in testing and comparing various solutions.

We have chosen to benchmark it against NIXTLA’s StatsForecast library. This library stands out due to its focus on performance and scalability, key factors for any forecasting tool.

Furthermore, when considering prominent industry players, we’ve looked into how NIXTLA stacks up against Amazon Forecast. NIXTLA reports that StatsForecast surpasses Amazon Forecast not only in accuracy, as measured by forecast error, but also in fitting and inference times.

For the purpose of this benchmark, we rely on two distinct datasets to ensure a thorough and fair comparison.

Benchmarking on Walmart Dataset from the M5 Competition

The Walmart dataset, coming from the M5 competition, features daily sales data spanning from 2011 to 2016 and covers over 30,000 products. Our objective with this forecasting challenge is to predict day-ahead demand for a withheld segment of the dataset.

Blockbax’s forecaster is designed for scalability: it can process large volumes of data without loading everything into memory at once, a requirement that limits many existing tools and libraries. To streamline our testing process and manage resource usage, we worked with a randomly selected subset of the full dataset, using a fixed random seed to keep the selection reproducible.
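As an illustration of seeded subsampling, here is a minimal sketch using only the Python standard library. The function name `sample_product_ids`, the seed value, the subset size, and the generated product IDs are all assumptions made for this example; they are not the code or settings used in the benchmark.

```python
import random

def sample_product_ids(product_ids, k, seed=42):
    """Return k product IDs chosen reproducibly from product_ids."""
    rng = random.Random(seed)  # local generator, global random state is untouched
    # Sort first so the result does not depend on the input order.
    return rng.sample(sorted(product_ids), k)

# Hypothetical IDs standing in for the M5 product catalogue.
all_ids = [f"item_{i:05d}" for i in range(30_000)]

subset_a = sample_product_ids(all_ids, k=1_000)
subset_b = sample_product_ids(all_ids, k=1_000)
print(subset_a == subset_b)  # same seed, same subset
```

Because the generator is seeded locally, rerunning the selection yields the same products, so both tools can be evaluated on identical series.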

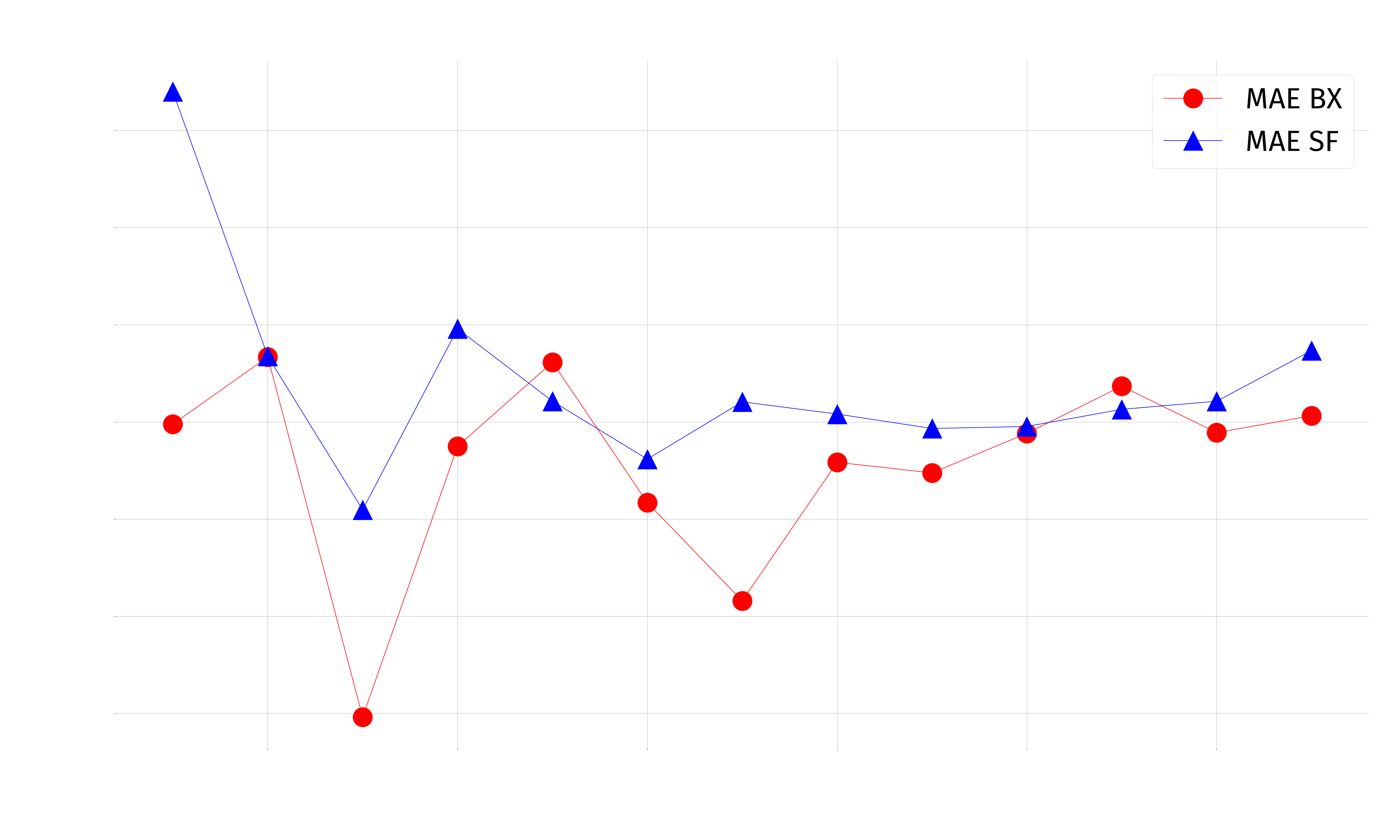

For our benchmark, we used two models from StatsForecast, DynamicOptimizedTheta and AutoETS, mirroring the approach NIXTLA used in their benchmarks. We gradually increased the number of products forecasted to evaluate the impact on performance and accuracy for both systems.

We measured the mean absolute error (MAE) across a growing subset of products.
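For reference, the standard definition of MAE over $n$ forecasted points, with $y_i$ the observed value and $\hat{y}_i$ the forecast, is shown below. How the errors were aggregated across products (pooled over all points or averaged per product) is not stated here, so the formula should be read as the generic definition only.

$$
\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| y_i - \hat{y}_i \right|
$$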

In almost all cases, Blockbax models achieved a lower mean absolute error than those from StatsForecast, even though the latter were tuned to capture seasonal patterns over longer periods (such as yearly cycles). Notably, Blockbax achieves this with a default feature set that isn’t explicitly optimized for long-term seasonality: its initial version focuses on shorter-term forecasts and lacks the specialized long-seasonality features that StatsForecast offers.

Finally, the limit on the number of items we could train on highlights another strength of Blockbax forecasted metrics. Many tools require the entire dataset to be held in memory for optimal performance or functionality, but this isn’t the case with Blockbax. In this benchmark, that in-memory requirement, combined with our hardware constraints, ultimately capped the dataset size.

Benchmark on Blockbax Climate Monitoring

The Blockbax climate monitoring dataset includes a calculated metric designed to mimic real-world data by incorporating predictable inputs with underlying trends, seasonal patterns, and an element of randomness to simulate environmental noise.
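To make the structure of such a metric concrete, the sketch below builds a series from a linear trend, a daily seasonal cycle, and accumulated random noise. Everything in it is an assumption for illustration: the hourly resolution, the function name `synthetic_temperature`, and all parameter values are hypothetical and do not reproduce the actual Blockbax calculated metric.

```python
import math
import random

def synthetic_temperature(n_hours, seed=0, base=15.0, trend_per_day=0.02,
                          daily_amp=5.0, step_std=0.3):
    """Hourly series = base + linear trend + daily sine cycle + random-walk noise."""
    rng = random.Random(seed)
    walk = 0.0
    values = []
    for t in range(n_hours):
        trend = trend_per_day * (t / 24)                       # slow upward drift
        seasonal = daily_amp * math.sin(2 * math.pi * t / 24)  # 24-hour cycle
        walk += rng.gauss(0.0, step_std)                       # noise accumulates over time
        values.append(base + trend + seasonal + walk)
    return values

series = synthetic_temperature(24 * 30)  # roughly one month of hourly points
print(len(series), round(min(series), 1), round(max(series), 1))
```

Setting `step_std=0.0` removes the noise and leaves only the predictable components, which is a convenient way to check what a model could learn in the ideal case.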

To compare the effectiveness of our forecasting methods, we used Blockbax’s end-to-end forecasted metric, which streamlines the process and eliminates the need for coding. Users can easily generate a forecasted metric graphically by using the metrics interface.

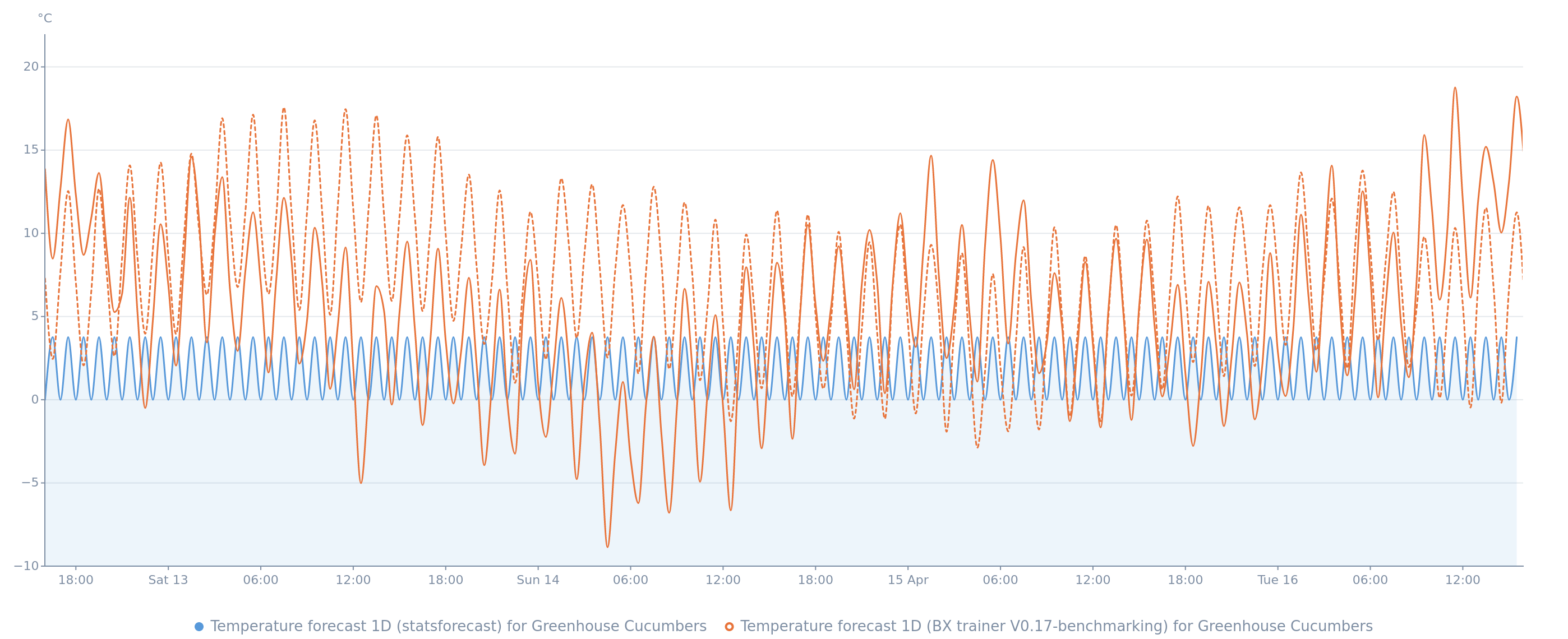

For a side-by-side comparison, we exported the same training data from the Blockbax forecasted metric and used it in StatsForecast to fit their model. Afterwards, we imported the StatsForecast predictions for the test set (in this case, the last month’s data) back into Blockbax.

Blockbax displays the calculated errors for its forecasted metric directly on the metric overview page. To assess the accuracy of the forecasts ingested from StatsForecast, we used the Blockbax public API to retrieve both observed and forecasted values and computed the mean absolute error for each tool. The resulting error metrics are as follows:

| Day Ahead Temperature Forecast | Blockbax | StatsForecast |
|---|---|---|
| Mean Absolute Error (°C) | 4.4643 | 6.4997 |
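The error calculation described above can be sketched as follows, once observed and forecasted values have been retrieved. The retrieval itself is left out, since no API calls are shown in this post; the dictionaries keyed by timestamp, the sample values, and the function name `mean_absolute_error` are hypothetical and only illustrate the alignment step.

```python
def mean_absolute_error(observed, forecast):
    """MAE over the timestamps present in both series (dicts: timestamp -> value)."""
    shared = sorted(observed.keys() & forecast.keys())
    if not shared:
        raise ValueError("no overlapping timestamps between the two series")
    return sum(abs(observed[ts] - forecast[ts]) for ts in shared) / len(shared)

# Hypothetical values, not taken from the benchmark.
observed = {"2024-07-01": 21.0, "2024-07-02": 23.5, "2024-07-03": 19.0}
forecast = {"2024-07-01": 20.0, "2024-07-02": 25.0,
            "2024-07-03": 19.5, "2024-07-04": 22.0}

mae = mean_absolute_error(observed, forecast)
print(mae)  # → 1.0
```

Restricting the calculation to shared timestamps keeps the comparison fair when one source contains points the other lacks, such as forecasts that extend past the last observation.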

In the visualization below, Blockbax forecasted temperatures are plotted with a dotted line and observed values in orange, while StatsForecast predictions appear in blue.

While error metrics like mean absolute error are important indicators of model performance, they are not the sole determinants of forecasting ability. For instance, a baseline model that simply carries forward the current value to predict the next can yield an acceptable error score without actually providing any true predictive capability.
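The carry-forward baseline mentioned above takes only a few lines to compute, which makes it a useful reference point for any reported MAE. This is a minimal sketch with made-up sample values; `persistence_mae` is a name chosen for this example.

```python
def persistence_mae(values, horizon=1):
    """MAE of a forecast that repeats the value seen `horizon` steps earlier."""
    if horizon < 1 or horizon >= len(values):
        raise ValueError("horizon must be between 1 and len(values) - 1")
    errors = [abs(values[t] - values[t - horizon])
              for t in range(horizon, len(values))]
    return sum(errors) / len(errors)

# Hypothetical temperature readings in °C.
temps = [20.0, 21.0, 19.5, 22.0, 21.5]
baseline = persistence_mae(temps)
print(baseline)  # → 1.375
```

A model whose MAE is not clearly below this baseline on the same data is adding little beyond repeating the latest observation.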

It is clear from the analysis that both models effectively capture the seasonality of the target metric, meaning they accurately track the periodic fluctuations within the data without considerable lag. However, the StatsForecast model appears to apply a consistent amplitude to its predictions, indicating it does not fully adapt to the varying trends present in the dataset.

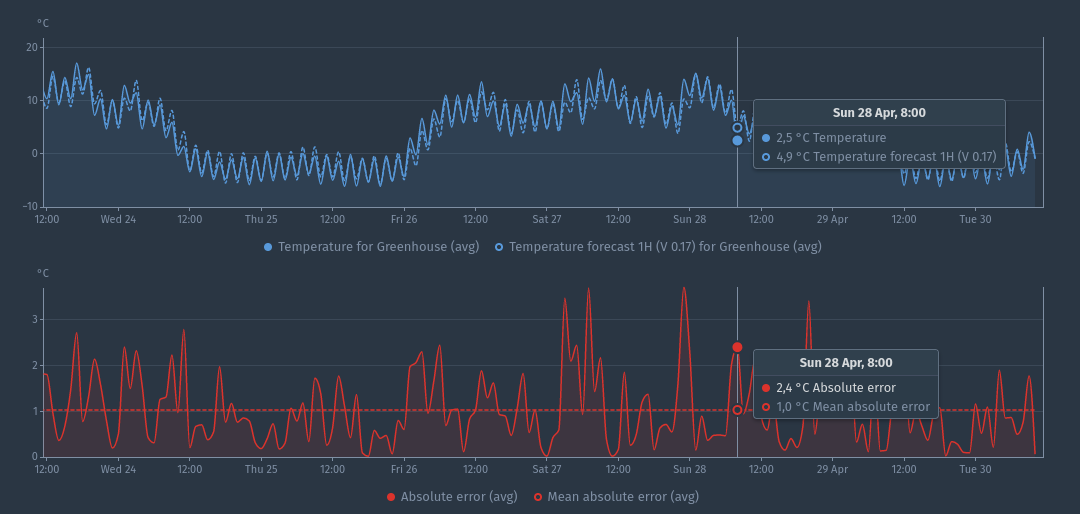

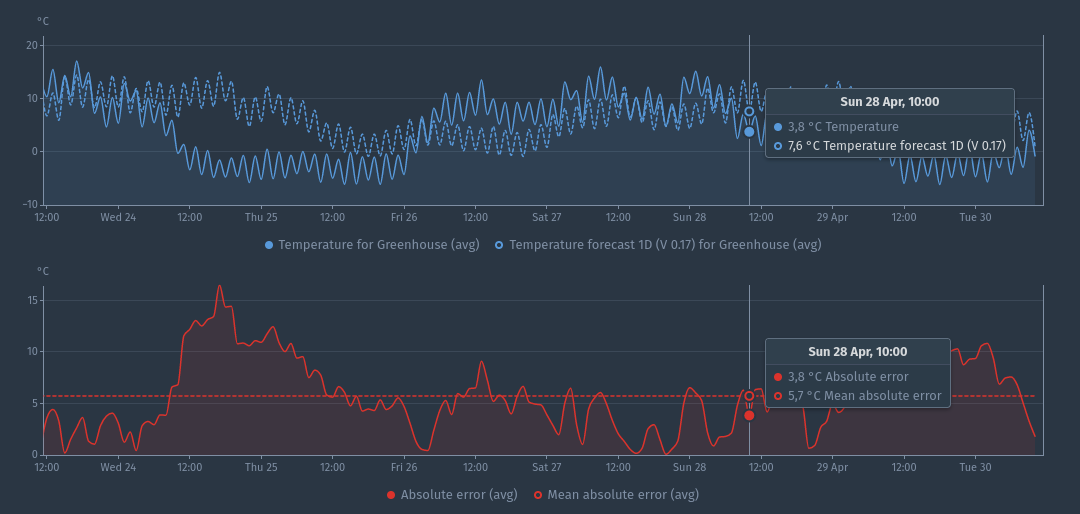

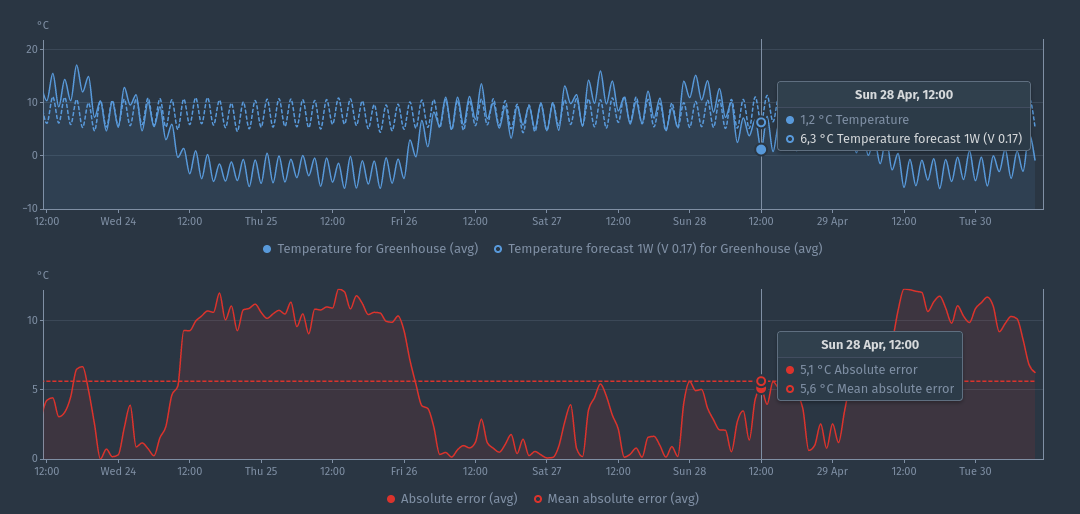

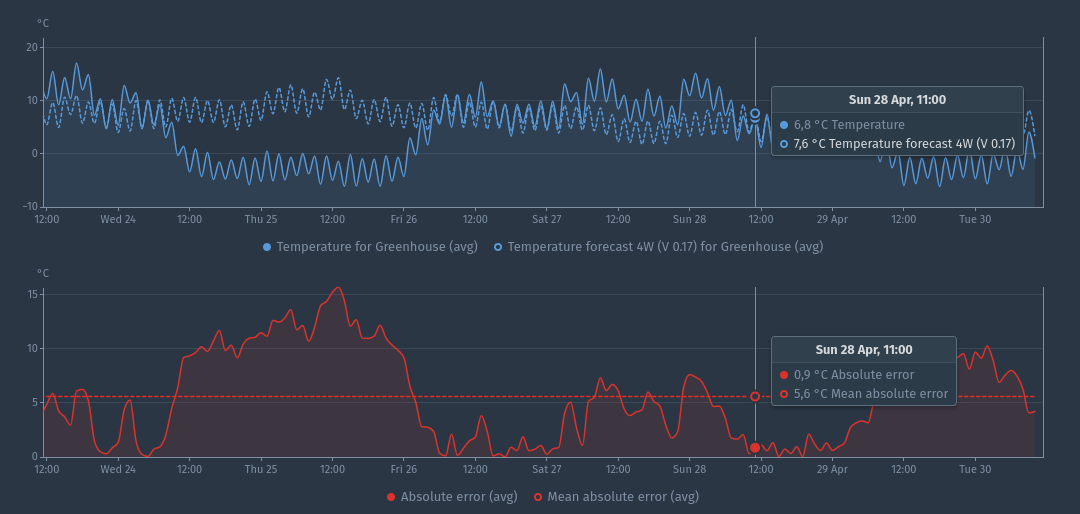

An additional consideration is the inherent random-walk noise component found within the dataset. Typically, the longer the forecasting period, the greater the cumulative impact of this noise, which reduces model accuracy. Consequently, shorter forecasting intervals tend to produce more reliable results. This can be seen in the reported temperature forecast case across different forecast periods.
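The effect of accumulating noise can be reproduced with a small simulation. The sketch below generates a pure Gaussian random walk and measures how far it drifts, on average, over different horizons; for such a walk the expected absolute drift grows with the square root of the horizon. The step size, seed, series length, and horizons are arbitrary choices for illustration and are unrelated to the climate monitoring dataset.

```python
import random

def random_walk(n_steps, step_std=1.0, seed=0):
    """Cumulative sum of independent Gaussian steps."""
    rng = random.Random(seed)
    position, path = 0.0, []
    for _ in range(n_steps):
        position += rng.gauss(0.0, step_std)
        path.append(position)
    return path

def mean_abs_drift(path, horizon):
    """Mean absolute change of the walk over `horizon` steps."""
    diffs = [abs(path[t] - path[t - horizon])
             for t in range(horizon, len(path))]
    return sum(diffs) / len(diffs)

path = random_walk(20_000)
errors = {h: mean_abs_drift(path, h) for h in (1, 6, 24)}
for h, err in errors.items():
    print(f"horizon={h:>2}  mean abs drift={err:.2f}")
```

Since this drift is unpredictable by construction, it sets a floor on the error any model can reach at a given horizon, and that floor rises as the horizon lengthens.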

Summary and future plans

We benchmarked our forecasting solution against NIXTLA’s StatsForecast, which NIXTLA itself reports as outperforming Amazon Forecast, using the Walmart M5 dataset and Blockbax Climate Monitoring data. Our solution showed lower errors than StatsForecast and scaled to data volumes that do not fit in memory. We plan continued model improvements and further comparisons to maintain this level of forecasting accuracy.

Looking ahead, we are committed to refining our feature engineering techniques, such as incorporating temporal features designed for extended time periods to better capture seasonal patterns. The deployment of more sophisticated models and improved hyperparameter tuning is also on our roadmap.

We welcome any feedback, inquiries, or suggestions you may have. Please feel free to contact us. Your input is invaluable as we continuously evolve our forecasting capabilities. Stay tuned for upcoming advancements!