Apache Kafka

Apache Kafka is an open-source distributed event streaming platform for high-performance data pipelines. This guide shows how to set up integrations that let your data (such as measurements and events) flow in and out of Kafka. It uses the Confluent Platform as an example, but the steps should also apply to self-hosted Kafka or other managed Kafka providers. If you need help, contact us.

Goal

The goal is to set up an inbound and outbound integration between Kafka and Blockbax.

Prerequisites

- Blockbax project (with administrator access)

- A Kafka cluster

Steps to stream data from Kafka to Blockbax

Several steps in both Confluent Platform and Blockbax are needed to get the data from Kafka to Blockbax.

- Log in to your Confluent account and go to the cluster that you want to connect to

- In the left sidebar, click ‘API Keys’ and then click the ‘Add key’ button in the top right

- Click the granular access button and connect the key to a service account (or create a new one)

- Give the service account READ access to the consumer group that is going to be used and READ access to the topic that holds the data

- Copy the key and secret that were generated
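Before moving on to Blockbax, it can help to check that the new key really has the READ access described above. The following is a minimal sketch, not an official procedure. It assumes the `confluent-kafka` Python package is installed and that the cluster exposes a TLS listener with SASL/PLAIN (as Confluent-hosted clusters typically do). The function names and placeholder values are hypothetical.

```python
def confluent_consumer_config(bootstrap_servers, api_key, api_secret, group_id):
    """Build a librdkafka-style config dict for SASL/PLAIN over TLS."""
    return {
        "bootstrap.servers": bootstrap_servers,
        "security.protocol": "SASL_SSL",   # assumption: the listener uses TLS
        "sasl.mechanisms": "PLAIN",
        "sasl.username": api_key,          # the Confluent API key
        "sasl.password": api_secret,       # the Confluent API secret
        "group.id": group_id,              # must match the consumer group ACL
        "auto.offset.reset": "earliest",
        # Do not commit offsets, so this check does not move the group's
        # position before the Blockbax connector starts consuming.
        "enable.auto.commit": False,
    }


def check_read_access(config, topic, timeout_s=10.0):
    """Try to read one message from the topic and report what happened."""
    from confluent_kafka import Consumer  # imported lazily; pip install confluent-kafka

    consumer = Consumer(config)
    try:
        consumer.subscribe([topic])
        msg = consumer.poll(timeout_s)
        if msg is None:
            return "no message within timeout (the topic may be empty)"
        if msg.error():
            return f"error: {msg.error()}"
        return f"read 1 message from {msg.topic()} [{msg.partition()}]"
    finally:
        consumer.close()
```

Calling `check_read_access(confluent_consumer_config(...), "<your-topic>")` with the copied key and secret should return either a success line or an authorization error. Run it before the Blockbax connector is created, since a second consumer in the same group triggers a rebalance.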

With the account created, we can now set up the connector in Blockbax:

- Go to settings in the project

- Navigate to the tab ‘Inbound connectors’ and click on the green ‘+’ in the top right

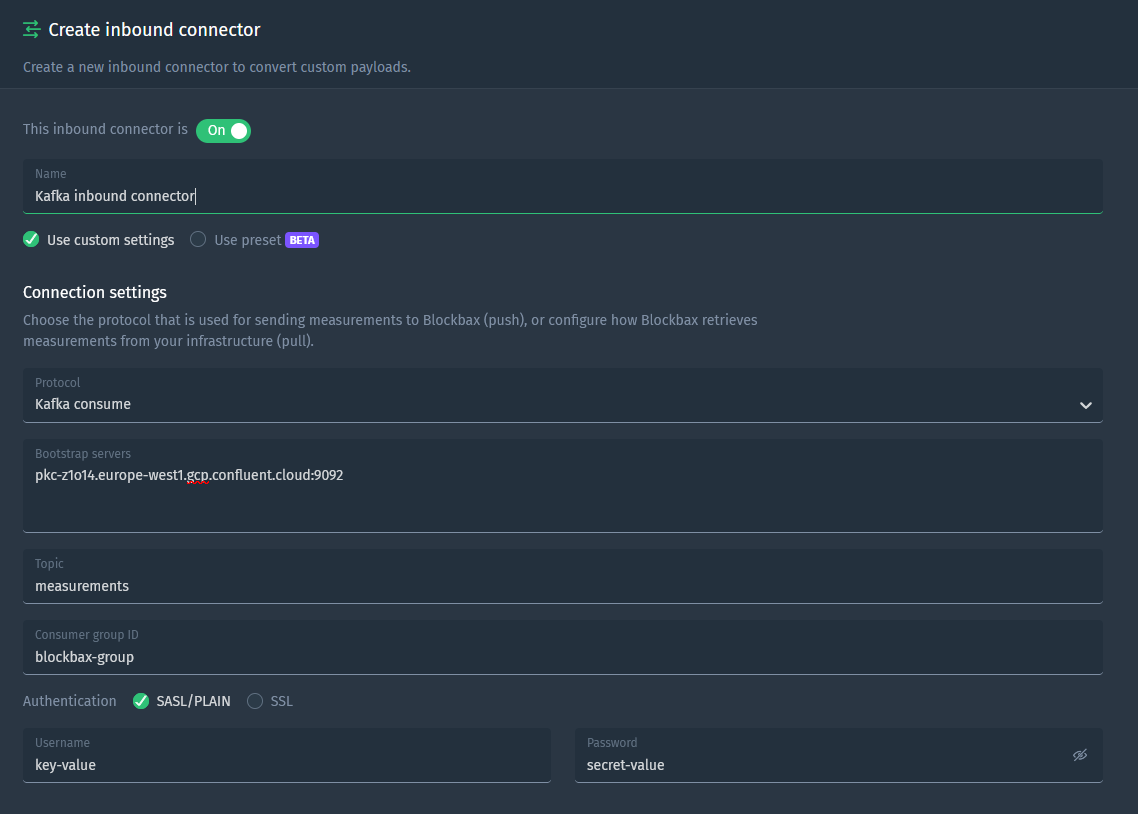

- In the setting ‘Protocol’ select the option ‘Kafka consume’

- Choose a suitable name for the inbound connector

- Select the ‘SASL/PLAIN’ option (it should already be selected) and fill in the ‘username’ and ‘password’ fields with the Confluent key and secret respectively

- (Optional) You can also authenticate with client certificates: select ‘SSL’ and upload the client certificate (`pfx` format) and the trust store certificate chain (`pem` format)

- Fill in the rest of the fields. The bootstrap servers can be found under cluster settings (in the sidebar); the ‘Topic’ and ‘Consumer group ID’ fields should match the authorization of the API key that was created

- Write a payload conversion script to convert the incoming payload to the correct Blockbax format. When using the Avro format, the otherwise optional schema registry URL has to be provided. More information can be found here

- Click ‘Create connector’ and check that the log message ‘Successfully (re)connected inbound connector, will start processing measurements.’ shows up. This can take up to 30 seconds. If you encounter any issues, feel free to contact us
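If the topic is quiet, one way to confirm that the inbound connector is consuming is to publish a test message yourself. The sketch below assumes the `confluent-kafka` Python package and a JSON payload. The field names (`sensorId`, `value`, `timestamp`) are purely hypothetical and should be replaced with whatever your payload conversion script expects. Note that producing requires credentials with WRITE access to the topic, which the READ-only key created above does not have.

```python
import json
import time


def build_test_payload(sensor_id, value, timestamp_ms=None):
    """Serialize a hypothetical measurement as UTF-8 encoded JSON bytes."""
    if timestamp_ms is None:
        timestamp_ms = int(time.time() * 1000)
    record = {"sensorId": sensor_id, "value": value, "timestamp": timestamp_ms}
    return json.dumps(record).encode("utf-8")


def send_test_message(producer_config, topic, payload):
    """Produce one message and wait for delivery; True if nothing is left queued."""
    from confluent_kafka import Producer  # imported lazily; pip install confluent-kafka

    producer = Producer(producer_config)
    producer.produce(topic, value=payload)
    # flush() returns the number of messages still waiting to be delivered.
    return producer.flush(10) == 0
```

Here `producer_config` is a dict with the same `bootstrap.servers` and SASL settings that the connector uses, without the consumer-only `group.id` entry.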

Steps to stream data from Blockbax to Kafka

To stream data out of Blockbax to Kafka, follow the same Confluent steps as for the consumer, but replace the topic READ access with topic WRITE access (no consumer group access has to be supplied). After this, streaming data out of Blockbax can be set up as follows:

- Go to settings in the project

- Navigate to the tab ‘Outbound connectors’ and click on either of the ‘Create connector’ buttons, depending on whether you want to stream out events or measurements

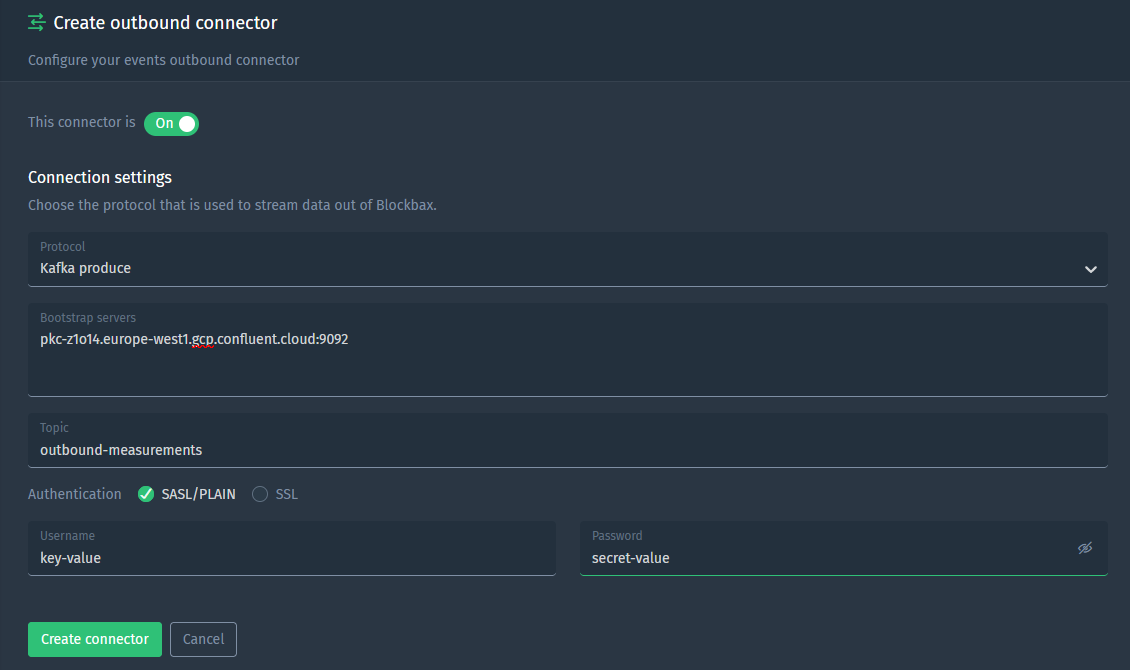

- In the setting ‘Protocol’ select the option ‘Kafka produce’

- Fill in the bootstrap servers (the same as in the previous section) and the topic that the data needs to be produced to

- Fill in the authentication options. This can be either ‘SASL/PLAIN’ or ‘SSL’

- Press ‘Create connector’ and check that data is coming into your Kafka cluster. If not, feel free to contact us
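To check that data is arriving, you can read a few messages from the target topic. This is a minimal sketch, again assuming the `confluent-kafka` Python package. It makes no assumption about the outbound payload format and simply prints a text preview of each message. The function names are hypothetical. The consumer needs its own credentials with READ access to the topic and a consumer group, because the key used by the outbound connector only has WRITE access.

```python
import time


def preview(value, limit=120):
    """Return a short, printable preview of a raw message value."""
    if value is None:
        return "<empty>"
    text = value.decode("utf-8", errors="replace")
    return text if len(text) <= limit else text[:limit] + "..."


def tail_topic(consumer_config, topic, max_messages=5, timeout_s=30.0):
    """Collect previews of up to max_messages messages within timeout_s seconds."""
    from confluent_kafka import Consumer  # imported lazily; pip install confluent-kafka

    consumer = Consumer(consumer_config)
    seen = []
    deadline = time.monotonic() + timeout_s
    try:
        consumer.subscribe([topic])
        while len(seen) < max_messages and time.monotonic() < deadline:
            msg = consumer.poll(1.0)
            if msg is None or msg.error():
                continue  # nothing yet, or a transient event; keep polling
            seen.append(preview(msg.value()))
    finally:
        consumer.close()
    return seen
```

An empty list after the timeout suggests that nothing was produced in that window. In that case, check the connector's topic name and authentication settings first.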